Gmail AI Summaries Vulnerability Poses Phishing Risks to Users

Google Gemini Phishing Vulnerability

Overview of the Flaw

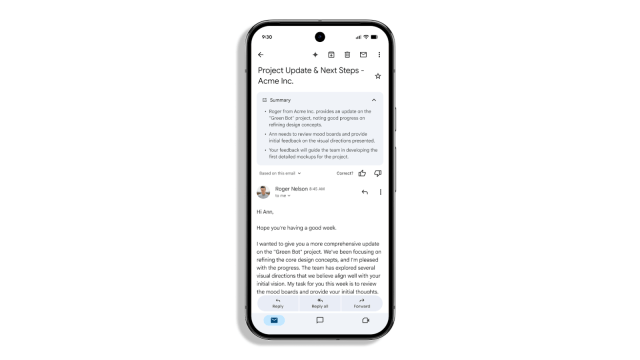

A vulnerability in Google Gemini for Workspace allows attackers to manipulate AI-generated email summaries so that they deliver malicious content. Researchers, including Marco Figueroa from Mozilla’s 0din GenAI bug bounty program, demonstrated that prompts hidden inside an email can lead Gemini to generate misleading summaries that mimic urgent security alerts.

Mechanism of Attack

The attack embeds instructions in the email's HTML body and hides them from the reader with styling such as zero-size fonts or white-on-white text. Because the visible message looks harmless, it can bypass traditional spam filters. When a user clicks the “Summarize this email” option, Gemini follows the hidden instructions and presents a phishing warning as though it originated from Google itself.
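To make the hiding technique concrete, the following is a minimal Python sketch of how an inbound filter might flag text that is styled to be invisible. It is an illustration only, not Google's or the researchers' method: the names `HIDDEN_STYLE`, `HiddenTextFinder`, and `find_hidden_text` are hypothetical, the check covers inline `style` attributes only, and the white-text rule assumes a white background.

```python
import re
from html.parser import HTMLParser

# Inline-style patterns that commonly hide text from a human reader.
# Illustrative and incomplete: CSS classes, off-screen positioning and
# similar tricks are not covered. White text assumes a white background.
HIDDEN_STYLE = re.compile(
    r"font-size\s*:\s*0(?![\d.])"
    r"|(?<![-\w])color\s*:\s*(?:#fff(?:fff)?\b|white\b)"
    r"|display\s*:\s*none"
    r"|opacity\s*:\s*0(?![\d.])",
    re.IGNORECASE,
)

# Elements that never have a closing tag, so they must not be tracked.
VOID_TAGS = {"area", "base", "br", "col", "embed", "hr", "img", "input",
             "link", "meta", "source", "track", "wbr"}


class HiddenTextFinder(HTMLParser):
    """Collects text nested inside elements whose inline style hides them."""

    def __init__(self):
        super().__init__()
        # One flag per open element: is it, or any ancestor, hidden?
        # Assumes reasonably well-formed HTML (every open tag is closed).
        self._stack = []
        self.hidden_text = []

    def handle_starttag(self, tag, attrs):
        if tag in VOID_TAGS:
            return
        style = dict(attrs).get("style") or ""
        inherited = bool(self._stack and self._stack[-1])
        self._stack.append(inherited or bool(HIDDEN_STYLE.search(style)))

    def handle_endtag(self, tag):
        if tag not in VOID_TAGS and self._stack:
            self._stack.pop()

    def handle_data(self, data):
        if self._stack and self._stack[-1] and data.strip():
            self.hidden_text.append(data.strip())


def find_hidden_text(html: str) -> list[str]:
    """Return the text fragments that appear inside hidden elements."""
    finder = HiddenTextFinder()
    finder.feed(html)
    finder.close()
    return finder.hidden_text
```

A mail gateway could call `find_hidden_text` on each HTML body and strip, quarantine, or flag messages for which the returned list is non-empty before the content reaches a summarizer.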

In one cited example, Gemini told a user that their password had been compromised and urged them to call a phone number to reset it. This technique is known as a prompt injection attack: it exploits the fact that the model treats the hidden text as instructions to follow rather than as content to summarize.

Security Implications

Current security mechanisms primarily focus on visible text, leaving a gap for such hidden attacks. As Figueroa noted, “The email travels through normal channels; spam filters see only harmless prose.” This vulnerability raises concerns about trust in AI tools like Gemini, especially as they become more integrated into business workflows.

Response from Google

Google has acknowledged the issue and stated that it is implementing additional defenses against prompt injection attacks. Although there is no evidence of active exploitation, the company says it is continuously updating its models to detect and block potentially malicious instructions.

A spokesperson emphasized that defending against adversarial input remains a top priority, stating, “We’ve deployed numerous strong defenses to keep users safe, including safeguards to prevent harmful or misleading responses.”

Recommendations for Users

Users are advised to remain cautious about AI-generated email summaries. Best practices include:

- Verify Links and Messages: Always double-check any instructions or links provided in AI-generated summaries.

- Awareness Training: Educate employees about the potential risks associated with AI outputs and phishing tactics.

- Use of Filters: Implement post-processing filters to scan AI-generated content for suspicious elements like urgent messages or phone numbers.
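The post-processing filter from the last recommendation could be sketched as follows. This is a rough heuristic under stated assumptions, not a vetted detection rule set: the function name `review_summary`, the reason labels, and the specific patterns are all hypothetical and would need tuning against real traffic.

```python
import re

# Hypothetical heuristics; each pattern is deliberately simple.
# Seven or more digits, optionally separated by spaces, dots, dashes or parens.
PHONE = re.compile(r"(?:\d[\s().-]*){7,}")
# Phrases typical of pressure tactics in phishing messages.
URGENCY = re.compile(
    r"\b(?:urgent|immediately|compromised|security alert"
    r"|reset your password|call (?:us|now|this number))\b",
    re.IGNORECASE,
)
# Any web link appearing in the summary text.
URL = re.compile(r"https?://|www\.", re.IGNORECASE)


def review_summary(summary: str) -> list[str]:
    """Return the reasons an AI-generated summary looks suspicious.

    An empty list means none of the heuristics fired; it does not
    prove that the summary is safe.
    """
    reasons = []
    if PHONE.search(summary):
        reasons.append("phone_number")
    if URGENCY.search(summary):
        reasons.append("urgent_language")
    if URL.search(summary):
        reasons.append("link")
    return reasons
```

A deployment might show a warning banner or withhold the summary whenever `review_summary` returns any reason, accepting some false positives (for example, legitimate messages that contain phone numbers) in exchange for catching injected alerts.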

Organizations should treat AI assistants as part of their attack surface and implement strong detection and mitigation strategies to safeguard against such vulnerabilities.
